How we’re building GC Forms: Our 4 accessible approaches

When building products for government, accessibility isn’t just about compliance; it’s good strategy too. Making space for this work is crucial: it helps the government deliver improved services and removes barriers to accessing them.

GC Forms is one of the tools we offer federal public servants to support their digital service delivery. It’s easy to use and helps quickly create and manage online forms that are secure, bilingual, accessible, and Government of Canada branded.

To share how the team prioritizes accessibility in every feature and phase of this growing product, we interviewed team members Bryan Robitaille (Principal Developer), Stevie-Ray Talbot (Senior Product Manager), and Sarah Hobson (Senior Policy Advisor).

They shared thoughts and tips on the team’s 4 approaches to building in accessibility from the start:

- Setting up automated tests to catch the majority of issues;

- Performing manual tests to catch issues missed in the automated tests;

- Conducting design research to see if the automated and manual tests created accessible user experiences; and

- Running audits to check our work and find ways to improve the current product and its development processes.

Q1: How do automated accessibility tests help with development?

A: Bryan Robitaille (Principal Developer)

Automated testing isn’t perfect but it’s a start

Let’s be honest, automated accessibility tests aren’t perfect. They don’t catch poor design choices or all of the Web Content Accessibility Guidelines (WCAG) use cases and success criteria. But they do offer a quick and simple way to catch over 50% of accessibility issues on web applications.

When you combine automated testing with other accessibility testing, it can reduce the team’s time spent on manual testing and fixes. This is very helpful for increasing our team’s capacity to improve and grow the product.

Want to learn how our team sets up and runs automated accessibility testing on GC Forms? Check out the tech tips section below.

Tech tips: How we set up our automated testing

1. Set up automated tests to catch accessibility issues; it reduces manual work and increases the team’s capacity for professional and product growth.

For our automated testing, we rely heavily on the open-source Axe accessibility testing engine. Axe integrates seamlessly with our Cypress testing suite, allowing us to test web pages exactly as people would interact with them. If we didn’t have an automation set up to catch these issues, it would add a lot of manual work for our developers and reduce their capacity to grow the product.

2. Use an accessibility linter to identify issues as you’re writing code (it’s like spell check). This saves time re-writing.

We don’t solely rely on Axe’s engine for our automated testing. We also leverage tools that try to identify issues as we write our application code, saving us time rewriting after testing (because we caught the issues in advance).

For example, we use the jsx-a11y ESLint plugin to help identify issues as we build our web application. Some of our developers also go a step further and use the Axe Accessibility Linter (it’s like spell check but for accessibility issues) as an extension in VS Code, which can provide accessibility linting for HTML, Angular, React, Markdown, and Vue.
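As a rough illustration of the linting setup described above, here is a minimal sketch of an ESLint configuration that turns on the jsx-a11y plugin. It is not GC Forms’ actual configuration: it assumes ESLint’s older “.eslintrc.js” file format and that eslint and eslint-plugin-jsx-a11y are already installed as development dependencies. The single rule override is only there to show the syntax.

```javascript
// .eslintrc.js: a sketch using ESLint's legacy ("eslintrc") config format.
// Assumes eslint and eslint-plugin-jsx-a11y are installed as dev dependencies.
module.exports = {
  parserOptions: {
    ecmaVersion: "latest",
    sourceType: "module",
    ecmaFeatures: { jsx: true }, // let ESLint parse JSX syntax
  },
  plugins: ["jsx-a11y"],
  // The plugin's shareable "recommended" rule set.
  extends: ["plugin:jsx-a11y/recommended"],
  rules: {
    // Illustrative override, shown only to demonstrate per-rule configuration:
    // report images and other media that lack a text alternative as errors.
    "jsx-a11y/alt-text": "error",
  },
};
```

Newer ESLint releases default to a different (“flat”) configuration file format, so check the plugin’s README for the equivalent setup if your project uses it.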

3. Set up your accessibility test file and add a few lines of code (step-by-step instructions for both are below).

To set up the file used for running automated tests, you need to add software packages to your existing development dependencies (the other software packages used in development work, not just testing ones). Add them using a package manager – it keeps track of the software packages your project depends on, so you can use the new testing packages in your automation.

Here’s how (step-by-step):

- Using the yarn or npm package managers, search and add these software packages to your development dependencies: cypress, axe-core, and cypress-axe.

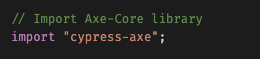

- Add one simple configuration line in Cypress itself: import "cypress-axe";

Alt Text: Screenshot of the line configuration in Cypress. Add “import "cypress-axe";” under “// Import Axe-Core library”.

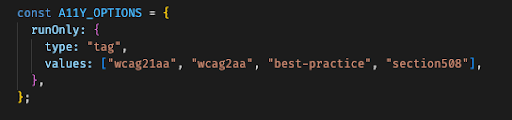

- Configure your Axe options in your test file (read Axe’s API documentation for guidance).

Alt Text: Screenshot of how GC Forms configured their Axe options, testing for: “wcag21aa”, “wcag2aa”, “best-practice”, and “section508”.

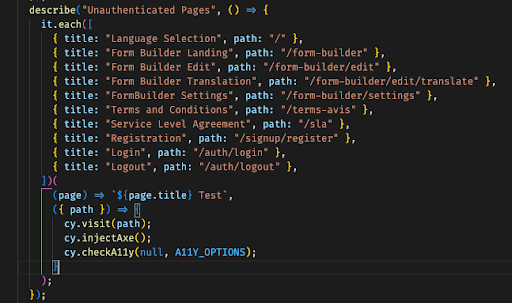

Also, as you can see in our Cypress testing file on GitHub, setting up Axe with Cypress for automated accessibility testing is accomplished by adding as little as 3 lines of code:

- cy.visit(path);

- cy.injectAxe();

- cy.checkA11y(null, A11Y_OPTIONS);

Alt Text: Screenshot of the 3 lines of code in GC Forms’ Cypress testing file: “cy.visit(path); cy.injectAxe(); cy.checkA11y(null, A11Y_OPTIONS);”.
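Putting the steps above together, a test file might look roughly like the sketch below. This is not the team’s real file. The helper name checkPageAccessibility, the spec title, and the "/" path are hypothetical. The shape of A11Y_OPTIONS is an assumption built from the four tags named in the screenshot description, using axe-core’s “runOnly” option. It also assumes the import "cypress-axe"; line is already in the Cypress support file.

```javascript
// Assumed shape of the options: axe-core's `runOnly` filter, limited to the
// four rule tags named in the article. GC Forms' real A11Y_OPTIONS may differ.
const A11Y_OPTIONS = {
  runOnly: {
    type: "tag",
    values: ["wcag21aa", "wcag2aa", "best-practice", "section508"],
  },
};

// Hypothetical helper wrapping the three commands from the article.
// `cy` is the global command object Cypress provides inside a spec file.
function checkPageAccessibility(path) {
  cy.visit(path); // load the page the way a person would
  cy.injectAxe(); // cypress-axe: inject the axe-core engine into the page
  cy.checkA11y(null, A11Y_OPTIONS); // null context = check the whole document
}

// Inside a Cypress spec file, the helper would be used like this:
//
// describe("Accessibility", () => {
//   it("has no detectable violations on the home page", () => {
//     checkPageAccessibility("/");
//   });
// });
```

Passing null as the first argument asks cypress-axe to check the whole page; a CSS selector could be passed instead to limit the check to one component.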

Q2: The team also runs manual accessibility tests – why both?

A: Bryan Robitaille (Principal Developer)

Manual tests help catch issues missed by automations

We strongly believe that application development teams should integrate at least some form of manual accessibility testing into their development process. Relying on automatic testing alone won’t catch all accessibility issues.

Along our journey, we’ve tried implementing many different manual testing processes. Some worked well, while others did not. Each team is different! However, after much trial and error, the right balance for our team is the “try it as you code it” approach, doing our best to test along the way.

Want to learn how our team approaches manual accessibility testing on GC Forms? Check out the tech tips section below.

Tech tips: Our “try it as you code it” manual test approach

1. Train developers on at least one assistive technology to bridge knowledge gaps between a web application’s appearance and functionality.

Developers often focus on the visual or sighted version of a web application. They may put more effort into the appearance of an HTML component and its interactions than into ensuring the component follows best practices for HTML layout and labelling.

We strongly encourage our developers to familiarize themselves with at least one assistive technology (NVDA, JAWS, VoiceOver, etc.) – enough to be able to navigate and interact with a web application. When we asked our developers to use an assistive technology to navigate GC Forms, an interesting thing happened: they became frustrated. Reframing their mindset from focusing on specific WCAG success criteria to focusing on user experiences showed where improvements were needed, like providing more informational context.

2. Update the team’s development processes to assign responsibilities around usability checks.

In our development process, each developer is responsible for the usability of components they’re building or modifying. They need to ensure components are accessible both visually and with assistive technologies.

With this process update, we quickly saw a shift in focus away from ensuring a visually perfect box-shadow and toward thinking of ways to improve the interactions of the components being built. This greatly increased the usability of our complex web application interactions, like uploading a file in a web form and submitting a web form after a set period of time (spam prevention).

Q3: How does design research improve product accessibility?

A: Sarah Hobson (Senior Policy Advisor)

Design research tests our assumptions

Accessibility tests and audits run against set parameters, but human behaviours and experiences are diverse and evolving – people can’t be accurately captured in that way. So while the test parameters can help us catch common design issues, we can’t replace direct engagement with people who use assistive technologies to navigate the internet.

Design research enables us to test our assumptions and better understand the impact of previous design and development decisions on real people. This feedback increases our confidence that passing tests and audits actually reflects an accessible user experience.

Are you a public servant interested in conducting design research on your product or service? Check out the research resources section below for guidance.

Research resources: Design accessible product experiences

Guidance to help with GC design research

We’ve created resources to help plan your testing methods and run research interviews, and we also offer a service to help you generate privacy and consent forms for research sessions.

How design research improved experiences using GC Forms

When developing the forms product, we conducted design research to test our assumptions about accessibility. This included running two design research activities with 12 people who use assistive technologies to navigate government services. We had 10 users complete a mock form and provide written feedback about their experiences completing the tasks. We also had 2 users narrate their experiences as they navigated and completed the form, which gave us more detailed information about their user journey.

Each research participant used their preferred assistive technology and browser:

- Dragon NaturallySpeaking with Chrome;

- JAWS with Edge;

- Mac built-in screen reader with Safari;

- NVDA with Chrome;

- NVDA with Internet Explorer 11;

- OS Magnification with Firefox;

- On-screen keyboard with Chrome;

- On-screen keyboard with Internet Explorer 11;

- Voice Control with Firefox;

- VoiceOver with Safari; and

- ZoomText with Edge.

One of the insights from these activities is that people don’t know what “Alpha” means, so it was confusing that the banner on our forms had “Alpha: This site will change as we test ideas.”

“I find that the word alpha is a little bit out of context because I can’t think of what it would be related to and where in the form it would be relevant” – design research participant

It was clear that while “Discovery, Alpha, Beta, and Live” mean something to us (they’re agile development stages for product releases), they don’t necessarily mean the same thing to people completing GC Forms. So we removed the banner, avoiding the unnecessary distraction and reducing confusion for users.

Q4: How do accessibility audits improve product experiences?

A: Stevie-Ray Talbot (Senior Product Manager)

Auditing for accessibility gaps

Call it cliché, but as the Product Manager, I like checklists. And that’s what the Web Content Accessibility Guidelines (WCAG) are – one giant checklist: check that it works for people using screen readers, people who get distracted or need more guidance, people with colour blindness, and the list of varying user experiences goes on. There are lots of things to check to make sure the product is accessible.

Doing automated and manual testing reduces the number of accessibility issues found in a product, but there will still be gaps. It’s not realistic to test with every assistive technology, every feature, every sprint (two weeks). That’s where we rely on some help from third parties. Audits help us build our confidence, show us where gaps are, reveal opportunities for training and learning, and make us think about how to improve our processes.

Want to learn more about making WCAG an integral part of your product processes? Check out the tech tip section below.

Tech tip: Integrating WCAG into development and testing processes

Tip from our experience: the WCAG checklist is too big for any one person to keep in mind all the time – try breaking down the requirements into smaller bits of work and integrating them into different parts of your product processes.

To help prompt thoughts around accessible experiences, we have placeholders in every user story, unique to the work.

For example, when we’re developing a new component (such as a button to upload files), we make sure it’s keyboard navigable and that a screen reader provides the information needed to upload a document. We implemented the navigation check as part of the “definition of done” and “acceptance criteria”.

Share your experiences with us!

We hope that sharing the GC Forms team’s 4 accessible approaches to product development (automated and manual testing, design research, and audits) will inspire others to start small and experiment. As Bryan said: each team is different, so find out what works best in your context.

We’d also like to recognize the people who helped make all of this possible. They helped us through the procurement process, audit, design research, and training. Thanks to Mario Garneau (Head of Design System and Platform Delivery), Adrianne Lee (Design Researcher, GC Notify), and Jesse Burcsik (Team Wellness and Development Lead, Platform) for all your support.

Setting up automations and updating processes helps us build accessibility in from the start. We’re taking this approach on our new self-serve tool that helps public servants quickly and easily build forms. It’s a simple and accessible way to collect feedback, request information, register for an event, and more (data collection: up to Protected A).

As we continue to improve the forms tool, we’d love your input. Hearing about others’ experiences with accessible approaches and their feedback on the product helps us make it more useful.

Contact us so we can learn from you too (FYI: this feedback form was made using GC Forms)!